One Kafka cluster across 2 DCs or 2 synchronized clusters?

It’s not uncommon to run 2 data centers if you want on-prem high availability. The primary may host everything, while the secondary hosts just the business-critical services in case the first DC fails. Let’s say we want a highly available Event Bus with 2 DCs. Should we have one cluster or two?

One cluster across 2 DCs

We may start with a basic cluster built from 3 ZooKeeper nodes and 3 brokers. We want an odd number of ZooKeeper nodes for voting purposes: the majority wins and takes the decision. A more mature cluster would have 5 ZooKeeper nodes and 5 brokers. If you have more brokers (data nodes) you have more space for topics. In the Kafka architecture every partition of a topic has one broker acting as its leader and other brokers acting as followers. The leader is the active owner of the partition, and its data is replicated to the followers. The more nodes you have, the chattier they are at the network level.

If you have 2 nodes in one DC and 3 in the other, you need a very good network between the data centers: low latency and reliable. You probably don’t have both DCs in the same geographical region, since that makes little sense considering power outages and natural disasters. Do you have high-quality, expensive colocation services giving you a 1 ms ping between the DCs over an AES-encrypted VPN with 2 redundant links? If yes, then cool. If not, the network may become a bottleneck and a single point of failure. You can create an overlay network spanning the 2 DCs so that the location is not visible to the Kafka nodes at Layer 7 of the OSI model. However, once an unexpected and sudden network failure has been resolved, your brokers will need to resync and you may need to rebalance them manually. I’m not saying no. I’m just presenting the risk.
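To make the voting argument concrete, here is a minimal sketch of the majority arithmetic for an ensemble split 2 + 3 between the data centers. The function names and the split are illustrative assumptions, not anything taken from ZooKeeper itself.

```python
def majority(ensemble_size: int) -> int:
    """Smallest number of voters that forms a strict majority."""
    return ensemble_size // 2 + 1


def has_quorum(ensemble_size: int, alive: int) -> bool:
    """True if the surviving voters can still reach a majority decision."""
    return alive >= majority(ensemble_size)


# Hypothetical 5-node ensemble split 2 + 3 across the two data centers.
nodes_per_dc = {"DC1": 2, "DC2": 3}
total = sum(nodes_per_dc.values())

# For each DC, check whether the ensemble keeps its quorum if that whole DC is lost.
survives_loss_of = {
    dc: has_quorum(total, total - count) for dc, count in nodes_per_dc.items()
}

for dc, ok in survives_loss_of.items():
    print(f"lose {dc}: quorum {'kept' if ok else 'lost'}")
```

With only two locations, one of them always holds the majority, so losing that DC (or the link to it) stops the voting on the other side.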

2 clusters in 2 DCs

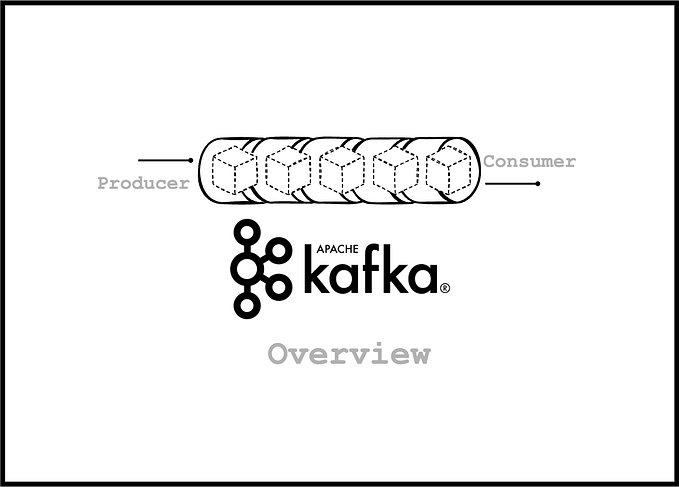

Kafka should always be available for the producer to push data. Kafka is used as a buffer (hey, it’s a messaging/queueing system, so it’s obvious). The consumer of the stored data may not be immediately available to take it, but it should be able to read the data as soon as it is back. In the Kafka client configuration we have bootstrap servers, and on the brokers’ side we have advertised listeners. The client connects to a live broker from the connection string. This broker returns the cluster metadata, including the list of all live brokers registered in ZooKeeper, to the client. So you contact one broker and get a list of many. What’s more, the advertised listeners may have host names that differ from the bootstrap servers. In the connection string you may have “kafka-cluster-primary:9092,kafka-cluster-secondary:9092”, where kafka-cluster-primary and kafka-cluster-secondary are load-balanced entries. The load balancer redirects TCP/IP traffic to the brokers of the primary and secondary cluster. Then there is data locality. If you are in DC2 (secondary), it doesn’t make sense to push data to kafka-cluster-primary in DC1. You can configure DNS so that kafka-cluster-primary resolves to the DC1 cluster for DC1 clients and to the DC2 cluster for DC2 clients. Cool, isn’t it?
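The bootstrap flow and the location-aware DNS can be sketched as a small simulation. Everything here is hypothetical: the `lb.*.example` and `broker*.example` host names, the assumption that kafka-cluster-secondary resolves to the other DC, and the simplification that the client tries the bootstrap entries in order (a real client is free to pick them differently).

```python
# Hypothetical split-horizon DNS: each DC has its own view of the same names.
DNS_VIEWS = {
    "DC1": {"kafka-cluster-primary": "lb.dc1.example",
            "kafka-cluster-secondary": "lb.dc2.example"},
    "DC2": {"kafka-cluster-primary": "lb.dc2.example",
            "kafka-cluster-secondary": "lb.dc1.example"},
}

# Hypothetical advertised listeners reported by the brokers behind each load balancer.
ADVERTISED_LISTENERS = {
    "lb.dc1.example": ["broker1.dc1.example:9092",
                       "broker2.dc1.example:9092",
                       "broker3.dc1.example:9092"],
    "lb.dc2.example": ["broker1.dc2.example:9092",
                       "broker2.dc2.example:9092",
                       "broker3.dc2.example:9092"],
}

CONNECTION_STRING = "kafka-cluster-primary:9092,kafka-cluster-secondary:9092"


def discover_brokers(client_dc, reachable, connection_string=CONNECTION_STRING):
    """Return the advertised listeners from the first reachable bootstrap entry."""
    for entry in connection_string.split(","):
        host = entry.rsplit(":", 1)[0]
        target = DNS_VIEWS[client_dc][host]  # resolution depends on the client's DC
        if target in reachable:
            return ADVERTISED_LISTENERS[target]
    raise ConnectionError("no bootstrap server is reachable")


both_up = {"lb.dc1.example", "lb.dc2.example"}
print(discover_brokers("DC1", both_up))             # DC1 client lands on DC1 brokers
print(discover_brokers("DC2", both_up))             # DC2 client lands on DC2 brokers
print(discover_brokers("DC2", {"lb.dc1.example"}))  # local cluster down: falls back to DC1
```

Note that the names returned to the client are the advertised listeners, not the names from the connection string, so the client must be able to resolve and reach those too.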

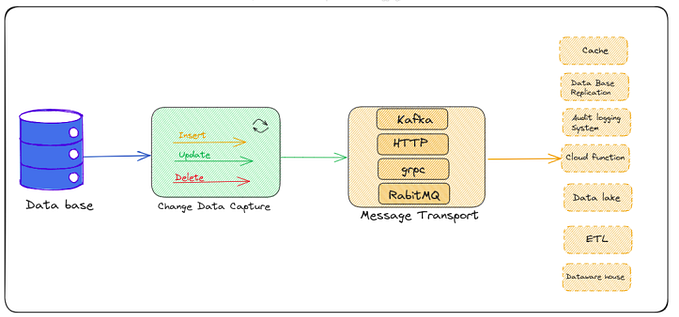

Now, my Kafka producer in DC1 wants to publish some data in DC1. There is a consumer in DC2 interested in this data. We need MirrorMaker, or any other Kafka consumer+producer pair, acting as a synchronization mechanism between the DCs. That gives us one-way synchronization from DC1 to DC2. What if we have another producer of the same data type in DC2 and another consumer in DC1? We need to synchronize DC2 with DC1 as well. Won’t we get a loop, DC1-DC2-DC1-DC2-...? No, we just need to record the source of the synchronized message in the Kafka headers. If the synchronizer in DC2 sees that a message was synchronized from DC1 to DC2, it won’t send it back to DC1.
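Here is a minimal in-memory sketch of the header-based loop prevention, with no real Kafka client involved. The header name `origin-dc`, the `Message` class and the `mirror` function are assumptions made up for the illustration; the lists stand in for the same topic in each cluster.

```python
from dataclasses import dataclass, field

ORIGIN_HEADER = "origin-dc"  # hypothetical header name


@dataclass
class Message:
    value: str
    headers: dict = field(default_factory=dict)


def mirror(source_log, source_dc):
    """Return the messages a synchronizer reading in `source_dc` would forward."""
    forwarded = []
    for msg in source_log:
        if ORIGIN_HEADER in msg.headers:
            continue  # arrived here via synchronization, so do not send it back
        tagged = Message(msg.value, {**msg.headers, ORIGIN_HEADER: source_dc})
        forwarded.append(tagged)
    return forwarded


# One locally produced message per data center.
dc1_log = [Message("order-1")]
dc2_log = [Message("order-2")]

# First pass: each side forwards what was produced locally.
to_dc2 = mirror(dc1_log, "DC1")
to_dc1 = mirror(dc2_log, "DC2")
dc2_log.extend(to_dc2)
dc1_log.extend(to_dc1)

# Second pass over the newly arrived messages: nothing bounces back.
bounced_back = mirror(to_dc2, "DC2") + mirror(to_dc1, "DC1")
print([m.value for m in dc1_log], [m.value for m in dc2_log], bounced_back)
```

Both logs end up with both messages, and the mirrored copies carry the header that stops the second hop.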

With MirrorMaker you can decide which topics to replicate and which not, so you minimize network traffic.
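Topic selection usually comes down to matching topic names against a list of patterns. The sketch below shows the idea in plain Python; the topic names and patterns are hypothetical, and the exact option that carries such patterns depends on the synchronizer you use.

```python
import re

# Hypothetical allowlist; each pattern must match the whole topic name.
REPLICATED_TOPICS = [r"orders\..*", r"payments"]


def should_replicate(topic, patterns=REPLICATED_TOPICS):
    """True if the topic name fully matches at least one allowlist pattern."""
    return any(re.fullmatch(pattern, topic) for pattern in patterns)


topics = ["orders.created", "orders.cancelled", "payments",
          "debug.traces", "payments-dlq"]
selected = [t for t in topics if should_replicate(t)]
print(selected)
```

Only the selected topics cross the inter-DC link; everything else stays local to its cluster.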

If you have a 5-node cluster across the DCs, then on the broker side you have min.insync.replicas=4 (assuming every partition is replicated to all 5 brokers). You can’t use 3, because you have 2 nodes in DC1 and 3 in DC2: with a value of 3, the three in-sync replicas could all sit in DC2 and the data would not be copied to the other DC. On the client side you have acks=all. This means that what the producer pushes may be copied up to 3 more times across the link to the other data center, once per follower there. With 2 clusters and MirrorMaker, you consume from a DC1 broker, push to a DC2 broker, and the data is then replicated inside the DC2 network, so inter-DC bandwidth usage is much lower. You can use acks=1, but that’s too risky (unless you can afford to lose data).
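The choice of min.insync.replicas for the 2 + 3 layout can be checked by brute force. This is a sketch under stated assumptions: hypothetical broker names, a replication factor of 5 (one replica of the partition on every broker), and producers using acks=all. It also shows the price of the setting and the number of inter-DC copies per message.

```python
from itertools import combinations

# Hypothetical 5-broker stretched cluster: 2 brokers in DC1, 3 in DC2.
BROKERS = {"b1": "DC1", "b2": "DC1", "b3": "DC2", "b4": "DC2", "b5": "DC2"}


def spans_both_dcs(min_isr):
    """True if every in-sync set of at least `min_isr` brokers covers both DCs."""
    for size in range(min_isr, len(BROKERS) + 1):
        for isr in combinations(BROKERS, size):
            if len({BROKERS[b] for b in isr}) < 2:
                return False
    return True


def writes_survive_loss_of(lost_dc, min_isr):
    """True if acks=all writes are still accepted after a whole DC is lost."""
    remaining = sum(1 for dc in BROKERS.values() if dc != lost_dc)
    return remaining >= min_isr


def cross_dc_copies(leader_dc):
    """Number of followers that fetch each message over the inter-DC link."""
    return sum(1 for dc in BROKERS.values() if dc != leader_dc)


guarantee = {n: spans_both_dcs(n) for n in range(1, len(BROKERS) + 1)}
print(guarantee)                          # only 4 and 5 force a copy in both DCs
print(writes_survive_loss_of("DC1", 4))   # 3 brokers left, fewer than 4
print(writes_survive_loss_of("DC2", 4))   # 2 brokers left, fewer than 4
print(cross_dc_copies("DC1"))             # leader in DC1, followers in DC2
```

So the value 4 does guarantee a copy in each DC, but under these assumptions acks=all writes stop whenever either DC is down. With 2 clusters and a synchronizer, each replicated message crosses the link once instead.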

Kafka on Kubernetes

You can create a Kafka cluster on Kubernetes with an overlay network spanning the 2 DCs. It can even be created with a k8s Operator (https://operatorhub.io/operator/banzaicloud-kafka-operator, https://operatorhub.io/operator/strimzi-kafka-operator) or a Helm chart (https://bitnami.com/stack/kafka/helm). Red Hat sells a rebranded Strimzi (https://www.redhat.com/en/resources/amq-streams-datasheet), but not to clients coming in from the street. Bitnami didn’t want to sell me commercial support last year. Traditional Big Data (Hadoop) vendors provide commercial support because Kafka is used as a cool transport layer. And of course there is the cloud, with multi-region replication and data encryption at rest. If you are no longer bound by the Danish war law, you can go with the cloud.